On Performance

Ahh, performance… It’s so much fun! It’s easy to measure, and as you work on it you can see immediate benefits (or not!). I personally enjoy spending time on interesting performance issues, and I’ll use this as a chance to plug IronPython 2.6 Beta 1, where we’ve continued our focus in this area. Even more fun is comparing and contrasting different implementations: the results can be both surprising and amusing. Recently Robert Smallshire has been doing just that: https://www.smallshire.org.uk/sufficientlysmall/2009/05/22/ironpython-2-0-and-jython-2-5-performance-compared-to-python-2-5/ Obviously Robert has found a case in IronPython where something is performing horribly. I’d like to take a look at what’s happening here and what we can do to improve this case.

But before I look at what’s going on here, I’d like to briefly say that we love to hear feedback from users of IronPython. We have a mailing list you can join to give us your feedback, and bugs can be reported on our website. We track both of these very closely and respond as quickly as we can, which is usually pretty fast. I mention this because it’s easier for us to improve IronPython when people let us know about a problem than when we have to scour the web to find issues. Hopefully this piqued interest will have more people trying out IronPython and giving us feedback.

Ok, but let’s get on to the fun technical details! It all comes down to one line of code: “Node.counter += 1”. That’s a pretty innocent-looking piece of code, so how can it cause so many problems? First you need to understand a little bit about how IronPython works. IronPython internally keeps versions of type objects and uses this information to generate optimal code paths for accessing those objects. So the next line of code in this example, “self._children = children”, usually turns into something like:

    if (self != null && self.GetType() == typeof(PyObject) && self.PythonType.Version == 42) {
        self._dict["_children"] = <new value>;
    }

What the “Node.counter += 1” line does, then, is change the version of the type, which invalidates this “rule”. So we’ll figure out what to do and generate a new cached rule, only to have it not work the next time around. The same thing applies to the calls to Node: we re-generate the “optimal” code again and again, only to discover we have to throw it away.
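The invalidation cycle described above can be illustrated with a small toy model. This is only a sketch: `ToyType`, `ToyInstance`, `SetAttrSite` and `run` are hypothetical names invented for the illustration, and the model merely counts how often a cached rule would have to be regenerated. It is not how IronPython is actually implemented.

```python
class ToyType(object):
    """Hypothetical stand-in for a type object that carries a version number."""

    def __init__(self):
        self.version = 0
        self.attrs = {}

    def set_attr(self, name, value):
        # In this toy model, every mutation of the type bumps its version.
        self.attrs[name] = value
        self.version += 1


class ToyInstance(object):
    """Hypothetical instance that remembers its type and holds its own fields."""

    def __init__(self, toy_type):
        self.toy_type = toy_type
        self.fields = {}


class SetAttrSite(object):
    """Hypothetical call site caching one rule for 'obj.<name> = value'."""

    def __init__(self, name):
        self.name = name
        self.cached_version = None
        self.rules_generated = 0

    def store(self, obj, value):
        if obj.toy_type.version != self.cached_version:
            # Guard failed: pretend to redo the expensive resolution
            # and cache a new rule for the current type version.
            self.rules_generated += 1
            self.cached_version = obj.toy_type.version
        obj.fields[self.name] = value


def run(mutate_type, iterations=1000):
    """Return how many rules the site had to generate."""
    node_type = ToyType()
    node_type.set_attr("counter", 0)
    site = SetAttrSite("_children")
    for _ in range(iterations):
        if mutate_type:
            # Models "Node.counter += 1" running before each attribute store.
            node_type.set_attr("counter", node_type.attrs["counter"] + 1)
        site.store(ToyInstance(node_type), [])
    return site.rules_generated
```

In this model, `run(True)` regenerates the rule on every iteration, while `run(False)` generates it once and then reuses it.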

So that sounds pretty bad, but if you’re familiar with Python’s member access rules you’ll quickly recognize that a cached rule which stays valid is much faster than doing the resolution again and again. That resolution involves looking through the class hierarchy to see if we have any data descriptors or classes defining __setattr__, and it's nice to avoid all of that work. So to get the best performance we need the world to settle down and not be continuously changing. We’re well aware of this problem with our implementation and we have continued to focus on improving it. IronPython 2.0 already includes one mitigation so that we don’t need to continuously generate new code; instead we effectively patch new values into an existing piece of code. In our IronPython 2.6 branch we’ve continued to improve performance here and significantly reduced the expense when things get mutated. But it turns out that there’s an easy fix for this particular problem; we’d just never thought it was an important enough problem to solve.

One of the amusing things about this is that we’ve actually seen it before. Resolver Systems was having some performance issues with Resolver One, their advanced spreadsheet, which is written in IronPython. One of those issues turned out to be a type which they both used frequently and mutated frequently. This doesn’t seem to be a common idiom in Python code, which is the reason we’ve never optimized for it before. In this case, though, it turned out that they had found an optimization that improved performance on IronPython 1.0 but was destroying perf on IronPython 2.0! For Resolver, fixing this was a simple change: they no longer mutate types. Even if you really want to use a type as a property bag, you can always move that to a type which you aren’t creating instances of and executing methods on.
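As a rough sketch of that last suggestion, the mutable values can live on a separate class that is never instantiated, so the frequently used class is left alone. The names `Counters` and `nodes` are made up for this example; only `Node` and `_children` come from the benchmark discussed above.

```python
class Counters(object):
    """Hypothetical property bag: never instantiated, no methods called on it."""
    nodes = 0


class Node(object):
    def __init__(self, children=()):
        # Mutates the property-bag class, not Node itself.
        Counters.nodes += 1
        self._children = list(children)
```

Creating `Node` instances still updates a class attribute, but the class being mutated is no longer the one whose instances and methods are on the hot path.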

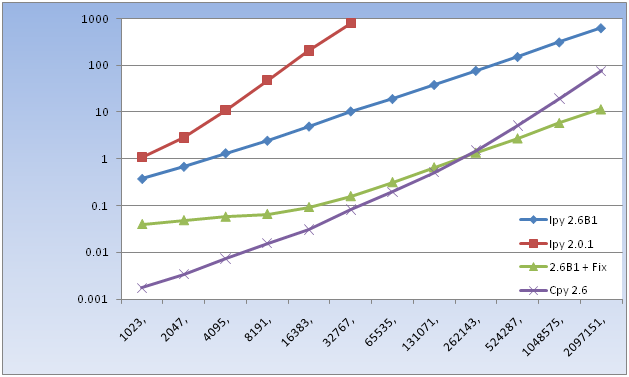

With all that in mind let’s look at some numbers. Here I’ve compared IronPython 2.0.1, IronPython 2.6 Beta 1, CPython 2.6, and IronPython 2.6 with a fix for this issue.

Like Robert’s charts, I have tree size in number of nodes across the bottom and execution time in seconds across the top, and the execution times are logarithmic. We can see the abysmal performance of 2.0.1, we can see that 2.6B1 has already made significant improvements, and with IronPython 2.6B1 plus a fix for this specific issue we actually beat CPython as the number of iterations scales up.

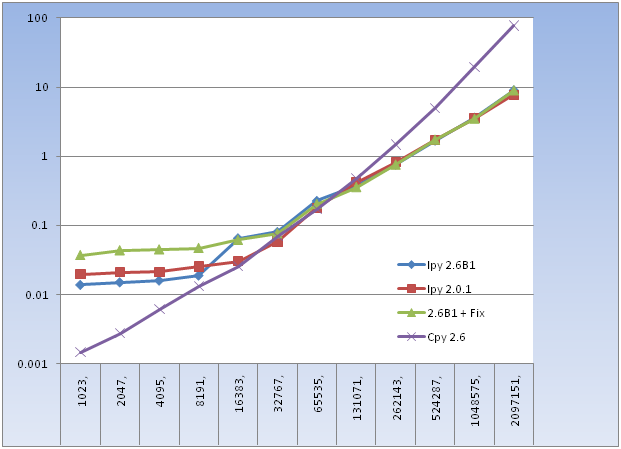

But there’s another way we can look at this: instead of fixing IronPython, let’s look at what happens if we fix the benchmark to avoid this performance pitfall. To do this I’ve replaced the mutation of the class with a global variable. When running against all of the same implementations again I get these results:

Here you can see that all 3 versions of IronPython are performing about the same and are coming in significantly faster than CPython as the number of iterations goes up. These are now also matching the numbers Robert is getting when he has made the same change.
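For reference, the benchmark change described above (a global variable in place of the class mutation) might look roughly like the following. This is a reconstruction, not Robert's actual code: `node_counter`, `make_tree` and `count_nodes` are names assumed for the sketch, and only `Node`, `counter` and `_children` appear in the original discussion.

```python
node_counter = 0  # module-level counter used instead of the class attribute


class Node(object):
    def __init__(self, children=()):
        global node_counter
        node_counter += 1  # was: Node.counter += 1
        self._children = children


def make_tree(depth, fanout=2):
    """Hypothetical helper: build a complete tree with the given depth."""
    if depth == 0:
        return Node()
    return Node([make_tree(depth - 1, fanout) for _ in range(fanout)])


def count_nodes(depth, fanout=2):
    """Build a tree and report how many Node objects were created for it."""
    start = node_counter
    make_tree(depth, fanout)
    return node_counter - start
```

The `Node` class is no longer modified after it is defined, so nothing in the tree-building loop changes the type.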

So you might be curious - what’s the fix? Well in this case we’re just mutating the type by changing a simple value stored in the type (versus say adding a property or a method to the type). Today we get a very slight throughput performance benefit because we can burn the value directly into the generated code instead of fetching it from the wrapper the type object keeps it in. With the fix we’ll burn the wrapper in and fetch the value from the wrapper each time. Then when we go and modify the type we just modify the wrapper instead of the type and its version is unchanged! This fix is now checked into the IronPython source tree and you can download the fixed sources right now.
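The idea of burning in the wrapper rather than the value can be sketched in a few lines. This is a toy model under stated assumptions: `ValueCell`, `CellType` and `make_reader` are hypothetical names, and the real fix has to handle cases this sketch ignores, such as a simple value being replaced by a descriptor.

```python
class ValueCell(object):
    """Hypothetical wrapper that holds one class-level value."""

    def __init__(self, value):
        self.value = value


class CellType(object):
    """Hypothetical type object storing its attributes in wrappers."""

    def __init__(self):
        self.version = 0
        self.cells = {}

    def set_attr(self, name, value):
        cell = self.cells.get(name)
        if cell is not None:
            # Existing simple value: update the wrapper in place.
            # The type's version stays the same.
            cell.value = value
        else:
            # A new attribute changes the shape of the type: bump the version.
            self.cells[name] = ValueCell(value)
            self.version += 1


def make_reader(cell_type, name):
    """Build a 'rule' that captures the wrapper, not the current value."""
    cell = cell_type.cells[name]

    def read():
        # Fetch through the wrapper each time, so later updates are visible.
        return cell.value

    return read
```

A reader created before an update still sees the new value afterwards, and the version it would be guarded on has not moved:

```python
node_type = CellType()
node_type.set_attr("counter", 0)
read_counter = make_reader(node_type, "counter")
version_before = node_type.version
node_type.set_attr("counter", 1)
print(read_counter(), node_type.version == version_before)
```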

In conclusion I’d like to wrap up a little bit about our performance philosophy – performance is hard and deciding which numbers matter to you is important. We have tried to tune IronPython for apps which run for more than a fraction of a second. We have also tuned IronPython for code which is not constantly mutating types at runtime. And we’re always open to feedback when users encounter problems with IronPython and we’ll listen to what scenarios are important for them. But if you look at these graphs I think you can see that we’ve done a good job at making IronPython a fast implementation of Python.
