I've checked several different algorithms, and the issue appears when I use the n-grams block to extract features. When I use feature hashing instead, for example, it seems to work well.

Azure ML real-time inference endpoint deployment stuck on transitioning status

I can't use my ML real-time inference endpoint because it has been stuck on the transitioning status for more than 20 hours. Could you help me with that?

10 additional answers

-

Mateusz Orzymkowski 96 Reputation points

2020-09-18T10:44:58.407+00:00 Unfortunately, retrying does not help.

Bearer token auth is enabled.

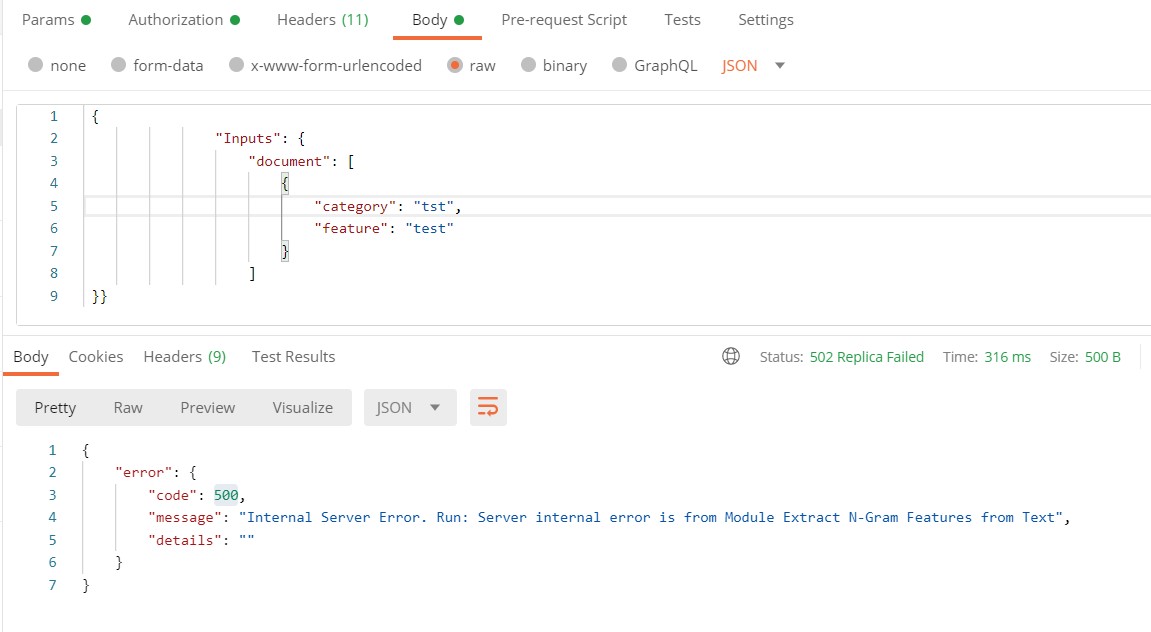

The error I get from Postman depends on the request body. First example:

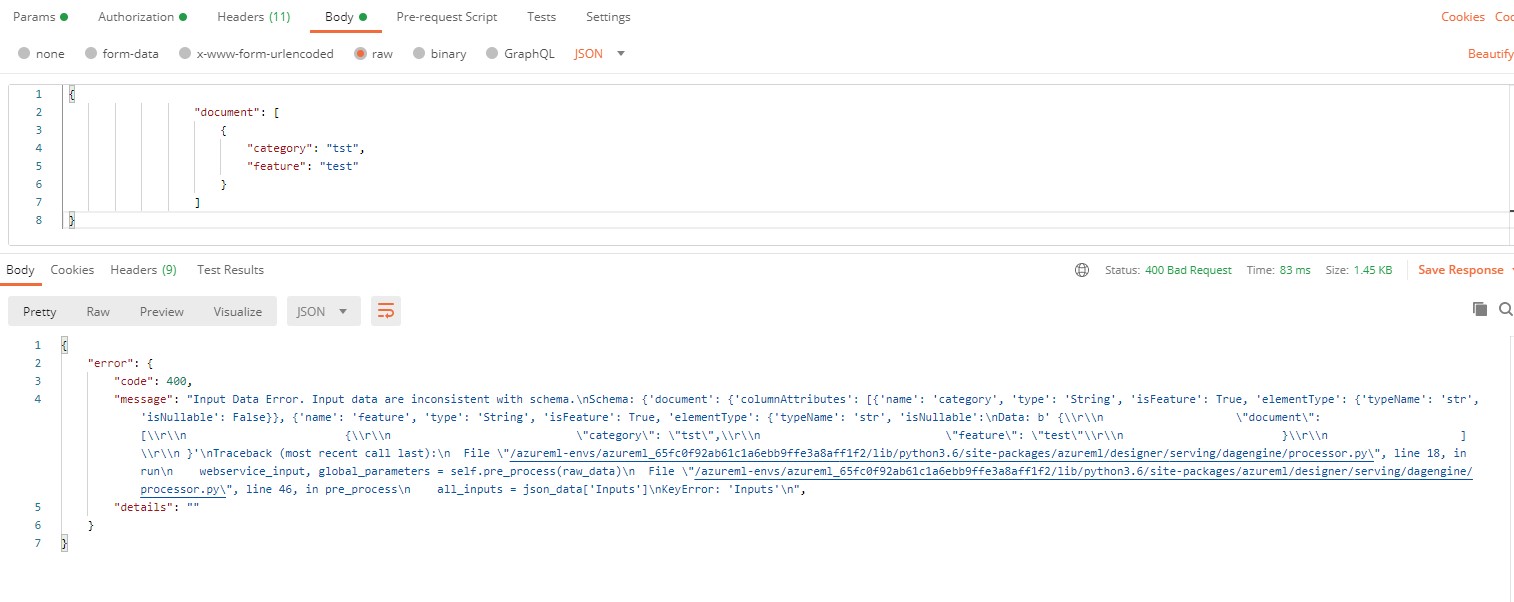

Second example:

In the second case it looks like I made a mistake in the request body, but in the first response I'm getting what looks like a typical server-side error. Or maybe I made a mistake in the body in both cases? Could you help me with that?
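For anyone reproducing this outside Postman, here is a minimal sketch of the same call in Python, using only the standard library. The helper names `build_scoring_request` and `classify_status` are hypothetical, and the URL, token, and payload shape are placeholders I'm assuming for illustration; the real scoring URI and input schema come from the endpoint's own details. It only shows how the bearer token header is attached and how a 4xx response (usually a problem with the request) differs from a 5xx response (usually a problem on the service side).

```python
import json
import urllib.request


def build_scoring_request(url, token, payload):
    """Build a POST request with a bearer token and a JSON body.

    url, token and payload are caller-supplied placeholders; nothing
    here is specific to one endpoint's schema.
    """
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


def classify_status(status):
    """Roughly separate request-side failures from service-side ones."""
    if 200 <= status < 300:
        return "ok"
    if 400 <= status < 500:
        return "client error: check the request body, URL, and token"
    if 500 <= status < 600:
        return "server error: check the deployment and its logs"
    return "unexpected status"
```

To actually send it, pass the request to `urllib.request.urlopen`; for 4xx and 5xx responses it raises `urllib.error.HTTPError`, whose `code` attribute can be handed to `classify_status`. A 502 Bad Gateway, for instance, falls in the 5xx range, so it would point at the service rather than the request body.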

Thank you in advance!

-

April Frommer 1 Reputation point

2020-09-18T14:17:54.91+00:00 I was also able to get a Healthy status after republishing my endpoint. However, I am still receiving the bad gateway message.

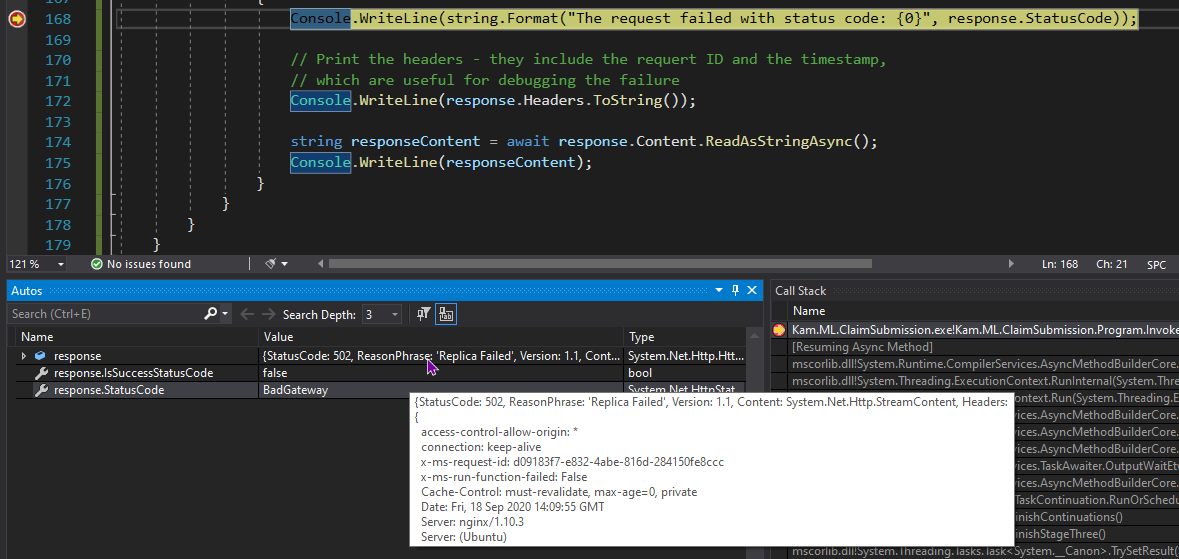

Here's a screenshot from my console app where I am receiving the error.