Events

Mar 31, 11 PM - Apr 2, 11 PM

The ultimate Microsoft Fabric, Power BI, SQL, and AI community-led event. March 31 to April 2, 2025.

Register today

This article is intended to help architects and developers make sound design decisions when they implement integration scenarios.

The article describes integration patterns, integration scenarios, and integration solutions and best practices. However, it doesn't include technical details about how to use or set up every integration pattern. It also doesn't include sample integration code.

Note

The guidance and scenarios for choosing a pattern mention data volume numbers. Use these numbers only to gauge which pattern fits. Don't treat them as hard system limits. Absolute numbers vary in real production environments because of many factors, and configuration is only one of them.

The following table lists the integration patterns that are available.

| Pattern | Documentation |
|---|---|
| Power Platform integration | Microsoft Power Platform integration with finance and operations apps |
| OData | Open Data Protocol (OData) |
| Batch data API | Recurring integrations, Data management package REST API |
| Custom service | Custom service development |
| Consume external web services | Consume external web services |
| Excel integration | Office integration overview |

Note

For on-premises deployments, the only supported API is the Data management package REST API. It's currently available on version 7.2, platform update 12 (build 7.0.4709.41184).

Processing can be either synchronous or asynchronous. Often, the type of processing that you must use determines the integration pattern that you choose.

A synchronous pattern is a blocking request and response pattern, where the caller is blocked until the callee has finished running and gives a response. An asynchronous pattern is a non-blocking pattern, where the caller submits the request and then continues without waiting for a response.

The following table lists the inbound integration patterns that are available.

| Pattern | Timing | Batch |
|---|---|---|
| OData | Synchronous | No |
| Batch data API | Asynchronous | Yes |

Before you compare synchronous and asynchronous patterns, you should be aware that all the REST and SOAP integration application programming interfaces (APIs) can be invoked either synchronously or asynchronously.

The following examples illustrate this point. You can't assume that the caller will be blocked when the Open Data Protocol (OData) is used for integration. The caller might not be blocked, depending on how a call is made.

| Pattern | Synchronous programming paradigm | Asynchronous programming paradigm |
|---|---|---|
| OData | DbResourceContext.SaveChanges | DbResourceContext.SaveChangesAsync |
| Custom service | httpRequest.GetResponse | httpRequest.BeginGetResponse |
| SOAP | UserSessionService.GetUserSessionInfo | UserSessionService.GetUserSessionInfoAsync |
| Batch data API | ImportFromPackage | BeginInvoke |
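Whether a caller is blocked depends on how the call is made, not on the API being called. The following minimal Python sketch shows that distinction. It uses a hypothetical `save_changes` function as a stand-in for any remote call (it isn't part of any finance and operations API). The same function is invoked once in a blocking way and once through a thread pool that immediately returns a `Future`.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor


def save_changes(record: dict) -> str:
    """Hypothetical stand-in for a remote call, such as saving changes through OData."""
    time.sleep(0.1)  # simulate network latency and server-side business logic
    return f"saved {record['id']}"


def call_synchronously(record: dict) -> str:
    # Blocking: the caller waits here until the callee returns a response.
    return save_changes(record)


def call_asynchronously(executor: ThreadPoolExecutor, record: dict) -> Future:
    # Non-blocking: submit() returns a Future immediately, so the caller can
    # continue with other work and collect the response later.
    return executor.submit(save_changes, record)


if __name__ == "__main__":
    print(call_synchronously({"id": 1}))
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = call_asynchronously(pool, {"id": 2})
        print("The caller keeps working while the request is in flight.")
        print(pending.result())  # block only where the response is actually needed
```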

Both OData and custom services are synchronous integration patterns, because when these APIs are called, business logic is immediately run. Here are some examples:

Batch data APIs are considered asynchronous integration patterns, because when these APIs are called, data is imported or exported in batch mode. For example, a call to the ImportFromPackage API can be made synchronously. However, the API only schedules a batch job that imports the specified data package. The scheduling call returns quickly, and the actual import is done later by the batch job. Therefore, batch data APIs are categorized as asynchronous.

Batch data APIs are designed to handle large-volume data imports and exports. It's difficult to define what exactly qualifies as a large volume. The answer depends on the entity, and on the amount of business logic that is run during import or export. However, here is a rule of thumb: If the volume is more than a few hundred thousand records, you should use the batch data API for integrations.
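The rule of thumb can be expressed as a small helper. In this Python sketch, the function name and the 200,000-record cutoff are illustrative assumptions, not documented limits. The cutoff was chosen only so that the scenarios in this article fall on the same side as their recommended pattern. A real decision should also weigh the entity and the business logic that runs during import or export.

```python
# Assumed cutoff standing in for "a few hundred thousand records". It's an
# illustrative value, not a documented or enforced system limit.
LARGE_VOLUME_THRESHOLD = 200_000


def suggest_pattern(peak_records: int, threshold: int = LARGE_VOLUME_THRESHOLD) -> str:
    """Suggest a starting-point integration pattern from the expected data volume."""
    if peak_records < 0:
        raise ValueError("peak_records must be non-negative")
    if peak_records >= threshold:
        # Large volumes: schedule the work and let a batch job process it.
        return "Batch data API"
    # Smaller volumes: call the API directly so that business logic runs immediately.
    return "OData or custom service"


if __name__ == "__main__":
    # Peak volumes taken from the scenarios in this article.
    for volume in (1_000, 5_000, 200_000, 300_000):
        print(f"{volume:>7,} records -> {suggest_pattern(volume)}")
```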

In general, when you're trying to choose an integration pattern, we recommend that you consider the following questions:

- Is real-time data required?
- What is the peak data volume?
- What is the frequency?

When you use a synchronous pattern, success or failure responses are returned to the caller. For example, when an OData call is used to insert sales orders, if a sales order line has a bad reference to a product that doesn't exist, the response that the caller receives contains an error message. The caller is responsible for handling any errors in the response.

When you use an asynchronous pattern, the caller receives an immediate response that indicates whether the scheduling call was successful. The caller is responsible for handling any errors in the response. After scheduling is done, the status of the data import or export isn't pushed to the caller. The caller must poll for the result of the corresponding import or export process, and must handle any errors accordingly.
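The polling responsibility described above can be sketched as a loop. This Python version assumes that the caller supplies a `get_status` function. That function is hypothetical here, and it might, for example, call the Data management package REST API's GetExecutionSummaryStatus action. The status names in `TERMINAL_STATES` are also assumptions, so verify them against the API reference. The loop returns the final status and leaves error handling, such as reacting to `Failed`, to the caller.

```python
import time
from typing import Callable

# Assumed terminal status values; check the API reference for your version.
TERMINAL_STATES = {"Succeeded", "PartiallySucceeded", "Failed", "Canceled"}


def wait_for_completion(get_status: Callable[[str], str], execution_id: str,
                        poll_interval: float = 30.0, timeout: float = 3600.0,
                        sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll the job status until it reaches a terminal state or the timeout elapses."""
    waited = 0.0
    while True:
        status = get_status(execution_id)
        if status in TERMINAL_STATES:
            return status  # the caller decides how to handle Failed or PartiallySucceeded
        if waited >= timeout:
            raise TimeoutError(
                f"Execution {execution_id} still {status!r} after {waited:.0f}s")
        sleep(poll_interval)  # the status isn't pushed, so wait and ask again
        waited += poll_interval
```

Injecting `sleep` keeps the loop testable without real delays. In production, the default `time.sleep` and a poll interval that matches the job's expected duration avoid needless load on the service.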

Here are some typical scenarios that use OData integrations.

Note

We discourage using OData for Power BI reports. For these scenarios, use the entity store instead.

A manufacturer defines and configures its products by using a third-party application that is hosted on-premises. The manufacturer wants to move its production information from the on-premises application to finance and operations. When a product is defined or changed in the on-premises application, users should see the same change in finance and operations in real time.

| Decision | Information |
|---|---|
| Is real-time data required? | Yes |
| Peak data volume | 1,000 records per hour\* |
| Frequency | Ad hoc |

\* Occasionally, many new or modified production configurations occur in a short time.

This scenario is best implemented by using the OData service endpoints to create and update product information in finance and operations.
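The create and update calls that the third-party application makes can be sketched as standard OData requests. A new record is created with POST to the entity set, and an existing record is updated with PATCH to its key. In this Python sketch, the environment URL, the `ReleasedProductsV2` entity set, and the field names are assumptions, so check them against your environment's OData `$metadata`. The sketch only builds the requests. Sending them (for example, with `urllib.request.urlopen`) and acquiring the bearer token are out of scope.

```python
import json
from urllib.parse import quote
from urllib.request import Request

# Hypothetical environment URL and assumed entity set name.
BASE_URL = "https://contoso.operations.dynamics.com"
ENTITY_SET = "ReleasedProductsV2"
KEY_SAFE = "=,'"  # characters left unencoded in the key segment


def _headers(token: str) -> dict:
    return {"Authorization": f"Bearer {token}", "Content-Type": "application/json"}


def _odata_string(value: str) -> str:
    # OData string literals are single-quoted; embedded quotes are doubled.
    return "'" + value.replace("'", "''") + "'"


def build_create_request(record: dict, token: str) -> Request:
    """Create a record: POST the full JSON body to the entity set."""
    return Request(f"{BASE_URL}/data/{ENTITY_SET}",
                   data=json.dumps(record).encode("utf-8"),
                   headers=_headers(token), method="POST")


def build_update_request(keys: dict, changes: dict, token: str) -> Request:
    """Update a record: PATCH only the changed fields, addressed by its key."""
    key_segment = ",".join(f"{name}={_odata_string(value)}" for name, value in keys.items())
    url = f"{BASE_URL}/data/{ENTITY_SET}({quote(key_segment, safe=KEY_SAFE)})"
    return Request(url, data=json.dumps(changes).encode("utf-8"),
                   headers=_headers(token), method="PATCH")
```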

In finance and operations:

In the third-party application:

A company has a self-hosted customer portal where customers can check the status of their orders. Order status information is maintained in the application.

| Decision | Information |
|---|---|
| Is real-time data required? | Yes |
| Peak data volume | 5,000 records per hour |
| Frequency | Ad hoc |

This scenario is best implemented by using the OData service endpoints to read order status information.
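A read from the portal can be a single OData GET query that filters on the server and returns only the fields the portal displays. In this Python sketch, the environment URL, the `SalesOrderHeadersV2` entity set, and the field names are assumptions. The sketch builds the query URL by using the standard `$filter`, `$select`, and `$top` query options.

```python
from urllib.parse import quote, urlencode

BASE_URL = "https://contoso.operations.dynamics.com"  # hypothetical environment URL
ENTITY_SET = "SalesOrderHeadersV2"                    # assumed entity set name


def build_order_status_query(customer_account: str, top: int = 50) -> str:
    """Build a read-only OData query for one customer's order numbers and statuses."""
    literal = customer_account.replace("'", "''")  # escape quotes in the OData literal
    params = {
        "$filter": f"OrderingCustomerAccountNumber eq '{literal}'",  # filter on the server
        "$select": "SalesOrderNumber,SalesOrderStatus",  # return only the needed fields
        "$top": str(top),  # cap the number of rows returned
    }
    # Keep $, commas, and quotes readable; encode spaces as %20 rather than '+'.
    query = urlencode(params, safe="$,'", quote_via=quote)
    return f"{BASE_URL}/data/{ENTITY_SET}?{query}"
```

Filtering by the signed-in customer's account on the server, rather than in the portal, keeps each response small and avoids returning other customers' orders.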

In finance and operations:

On the customer portal site:

A company uses a product lifecycle management (PLM) system that is hosted on-premises. The PLM system has a workflow that sends the finished bill of materials (BOM) information to the application for approval.

| Decision | Information |
|---|---|
| Is real-time data required? | Yes |
| Peak data volume | 1,000 records per hour |
| Frequency | Ad hoc |

This scenario can be implemented by using an OData action.

In finance and operations:

In the PLM solution:

Note

You can find an example of this type of OData action in BOMBillOfMaterialsHeaderEntity::approve.
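In OData, an action that is bound to an entity is invoked with POST on the entity's URL, followed by the namespace-qualified action name. This Python sketch builds such a request for an approve action like the one in BOMBillOfMaterialsHeaderEntity::approve. The entity set name `BillOfMaterialsHeaders`, the key fields, and whether the real action takes parameters are all assumptions. Confirm them in your environment's `$metadata` before relying on the sketch.

```python
import json
from typing import Optional
from urllib.parse import quote
from urllib.request import Request

BASE_URL = "https://contoso.operations.dynamics.com"  # hypothetical environment URL
ENTITY_SET = "BillOfMaterialsHeaders"                  # assumed public collection name
ACTION = "Microsoft.Dynamics.DataEntities.approve"     # namespace-qualified action name
KEY_SAFE = "=,'"


def _odata_string(value: str) -> str:
    # OData string literals are single-quoted; embedded quotes are doubled.
    return "'" + value.replace("'", "''") + "'"


def build_approve_request(data_area_id: str, bom_id: str, token: str,
                          parameters: Optional[dict] = None) -> Request:
    """Invoke the bound action with POST on the addressed entity."""
    key = f"dataAreaId={_odata_string(data_area_id)},BOMId={_odata_string(bom_id)}"
    url = f"{BASE_URL}/data/{ENTITY_SET}({quote(key, safe=KEY_SAFE)})/{ACTION}"
    # Action parameters, if any, go in the JSON body by parameter name.
    return Request(url, data=json.dumps(parameters or {}).encode("utf-8"),
                   headers={"Authorization": f"Bearer {token}",
                            "Content-Type": "application/json"},
                   method="POST")
```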

Here are some typical scenarios that use a custom service.

An energy company has field workers who schedule installation jobs for heaters. This company uses the application for the back office and third-party software as a service (SaaS) to schedule appointments. When field workers schedule appointments, they must look up inventory availability to make sure that installation parts are available for the job.

| Decision | Information |
|---|---|
| Is real-time data required? | Yes |
| Peak data volume | 1,000 records per hour |
| Frequency | Ad hoc |

This scenario can be implemented by using a custom service.

In finance and operations:

In the scheduling application:

Note

You can find an example of this type of custom service in the Retail Real Time Services implementation: RetailTransactionServiceInventory::inventoryLookup.

You can also use the inventorySiteOnHand entity to achieve the same result. Sometimes, the same data and business logic can be exposed through multiple methods that are all equally valid and effective. In such cases, choose the method that works best for the given scenario and that the developer is most comfortable with.
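Custom services that are exposed through the JSON endpoint are called with POST to a URL of the form `/api/services/<service group>/<service>/<operation>`, with the operation's parameters in the JSON body. In this Python sketch of the scheduling application's side, the service group, service, operation, and parameter names are hypothetical placeholders for whatever the custom service actually defines.

```python
import json
from urllib.request import Request

BASE_URL = "https://contoso.operations.dynamics.com"  # hypothetical environment URL


def build_custom_service_request(group: str, service: str, operation: str,
                                 params: dict, token: str) -> Request:
    """POST the operation's parameters as JSON to the custom service endpoint."""
    url = f"{BASE_URL}/api/services/{group}/{service}/{operation}"
    return Request(url, data=json.dumps(params).encode("utf-8"),
                   headers={"Authorization": f"Bearer {token}",
                            "Content-Type": "application/json"},
                   method="POST")


if __name__ == "__main__":
    # Hypothetical names for an inventory lookup operation.
    request = build_custom_service_request(
        "ContosoInventoryServices", "ContosoInventoryLookupService", "getOnHand",
        {"itemId": "HEATER-01", "siteId": "1"}, "<access token>")
    print(request.get_method(), request.full_url)
```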

Here are some typical scenarios that use batch data APIs.

A company receives a large volume of sales orders from a front-end system that runs on-premises. These orders must periodically be sent to the application for processing and management.

| Decision | Information |
|---|---|
| Is real-time data required? | No |
| Peak data volume | 200,000 records per hour |
| Frequency | One time every five minutes |

This scenario is best implemented by using batch data APIs.
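On the on-premises side, each five-minute cycle would upload a data package and then schedule its import with the Data management package REST API's ImportFromPackage action. This Python sketch builds only the scheduling request. The parameter names follow the API reference as I understand it (`packageUrl`, `definitionGroupId`, `executionId`, `execute`, `overwrite`, `legalEntityId`). Verify them and their exact semantics for your version. The environment URL, project name, and legal entity are hypothetical.

```python
import json
from urllib.request import Request

BASE_URL = "https://contoso.operations.dynamics.com"  # hypothetical environment URL
IMPORT_ACTION = ("/data/DataManagementDefinitionGroups/"
                 "Microsoft.Dynamics.DataEntities.ImportFromPackage")


def build_import_request(package_url: str, project: str, legal_entity: str,
                         token: str, execution_id: str = "") -> Request:
    """Schedule the import of a data package that was already uploaded to blob storage."""
    body = {
        "packageUrl": package_url,      # blob URL that the package was uploaded to
        "definitionGroupId": project,   # name of the import data project
        "executionId": execution_id,    # empty string: a new execution ID is generated
        "execute": True,                # run the import after it's scheduled
        "overwrite": True,              # overwrite flag as defined by the API reference
        "legalEntityId": legal_entity,  # company that the data is imported into
    }
    return Request(BASE_URL + IMPORT_ACTION,
                   data=json.dumps(body).encode("utf-8"),
                   headers={"Authorization": f"Bearer {token}",
                            "Content-Type": "application/json"},
                   method="POST")
```

The call returns quickly, and the response is expected to carry the execution ID. The caller then polls that ID for the import's outcome, because the status isn't pushed back.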

In finance and operations:

In the on-premises system:

A company generates a large volume of purchase orders in finance and operations and uses an on-premises inventory management system to receive products. Purchase orders must be moved from finance and operations to the on-premises inventory system.

| Decision | Information |
|---|---|
| Is real-time data required? | No |
| Peak data volume | 300,000 records per hour |
| Frequency | One time per hour |

This scenario is best implemented by using batch data APIs.
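For the outbound direction, the hourly cycle is typically split into two calls. The first call schedules an export of the data project with ExportToPackage. After the job finishes, the second call requests the package's download URL with GetExportedPackageUrl. In this Python sketch, the parameter names reflect my understanding of the Data management package REST API reference and should be verified. The environment URL, project name, and package name are hypothetical.

```python
import json
from urllib.request import Request

BASE_URL = "https://contoso.operations.dynamics.com"  # hypothetical environment URL
ACTIONS = "/data/DataManagementDefinitionGroups/Microsoft.Dynamics.DataEntities."


def _post(action: str, body: dict, token: str) -> Request:
    return Request(BASE_URL + ACTIONS + action,
                   data=json.dumps(body).encode("utf-8"),
                   headers={"Authorization": f"Bearer {token}",
                            "Content-Type": "application/json"},
                   method="POST")


def build_export_request(project: str, package_name: str, legal_entity: str,
                         token: str) -> Request:
    """Schedule an export of the data project as a data package."""
    return _post("ExportToPackage", {
        "definitionGroupId": project,   # name of the export data project
        "packageName": package_name,    # file name for the generated package
        "executionId": "",              # empty string: a new execution ID is generated
        "reExecute": False,             # not a rerun of an earlier execution
        "legalEntityId": legal_entity,  # company that the data is exported from
    }, token)


def build_download_url_request(execution_id: str, token: str) -> Request:
    """After the export job completes, request the package's download URL."""
    return _post("GetExportedPackageUrl", {"executionId": execution_id}, token)
```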

In finance and operations:

In the on-premises inventory system:

The application often calls out to an external web service that is hosted either on-premises or by another SaaS provider. In this case, the application acts as the integration client. When you write an integration client for the application, follow the same best practices and guidelines that you follow when you write an integration client for any other application. For a simple example, see Consume external web services.

Important

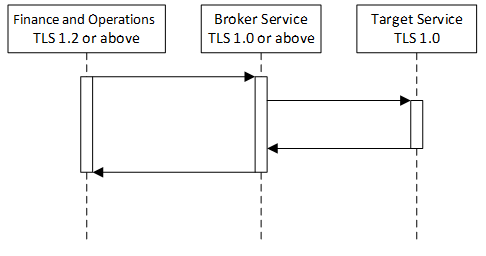

Because of security requirements, production and sandbox environments support only secured communication that uses Transport Layer Security (TLS) 1.2 or later. In other words, the target web service endpoint that the application calls out to must support TLS 1.2 or later. If the target service endpoint doesn't meet this requirement, calls fail. The exception error message resembles the following message:

Unable to read data from the transport connection: An existing connection was forcibly closed by the remote host.
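To find out in advance whether a target endpoint meets the TLS 1.2 requirement, you can try a handshake that refuses anything older. This minimal Python sketch connects from wherever you run it. It doesn't reproduce the finance and operations environment's own outbound client, so treat a successful handshake as a strong hint rather than a guarantee. The host name in the usage line is hypothetical.

```python
import socket
import ssl


def make_tls12_context() -> ssl.SSLContext:
    """Certificate-verifying client context that refuses protocols older than TLS 1.2."""
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def negotiated_tls_version(host: str, port: int = 443, timeout: float = 10.0) -> str:
    """Return the negotiated protocol, for example 'TLSv1.2' or 'TLSv1.3'.

    Raises ssl.SSLError (or a connection error) if the endpoint can't
    complete a TLS 1.2 or later handshake.
    """
    context = make_tls12_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()


if __name__ == "__main__":
    print(negotiated_tls_version("legacy-service.contoso.com"))  # hypothetical host
```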

If you can't modify the target service so that it uses TLS 1.2 or later, you can work around this issue by introducing a broker service and making a two-hop call, as shown in the following illustration.
