I've checked several different algorithms, and the issue appears when I'm using the n-grams block to extract features. When I use feature hashing, for example, it seems to work well.

Azure ML real-time inference endpoint deployment stuck on transitioning status

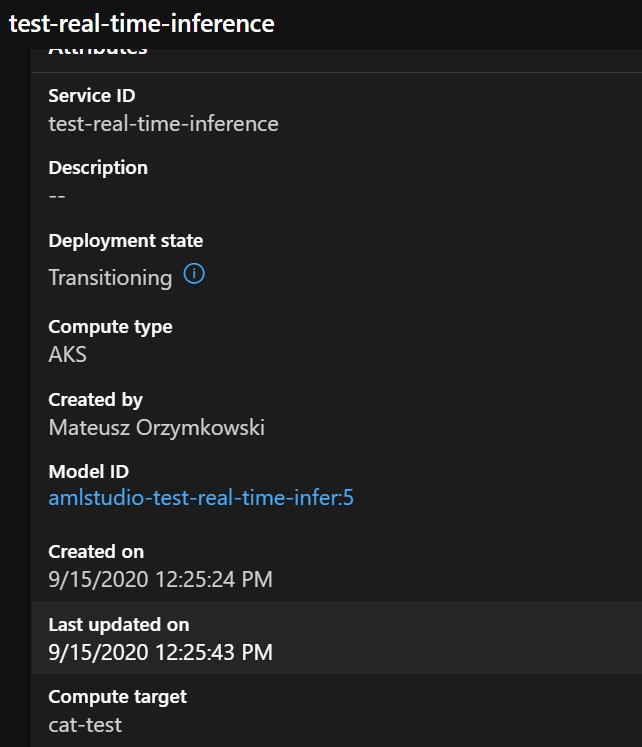

I can't use the ML real-time inference endpoint because it has been stuck on transitioning status for more than 20 hours. Could you help me with that?

-

Bozhong Lin 81 Reputation points Microsoft Employee

2020-09-16T07:50:51.097+00:00 @Mateusz Orzymkowski I will be glad to help you. A deployment stuck on transitioning could be due to container startup issues caused by a failure to load the model, an improper deployment configuration, or code issues in score.py. Could you please give us some further information so we can help:

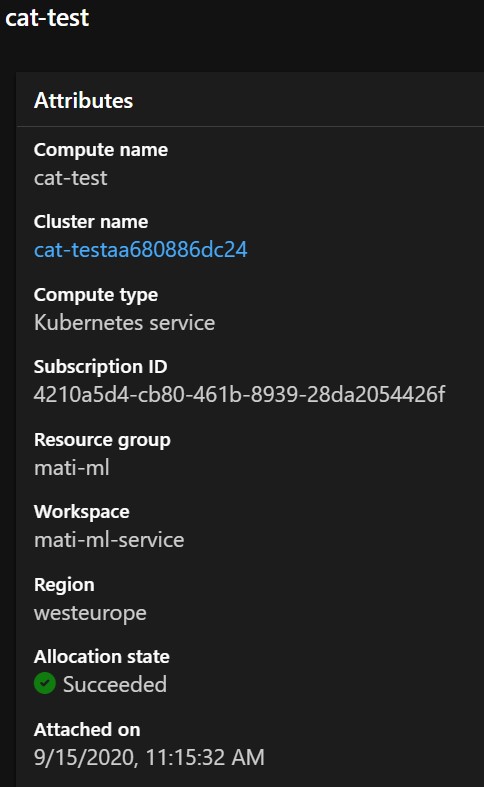

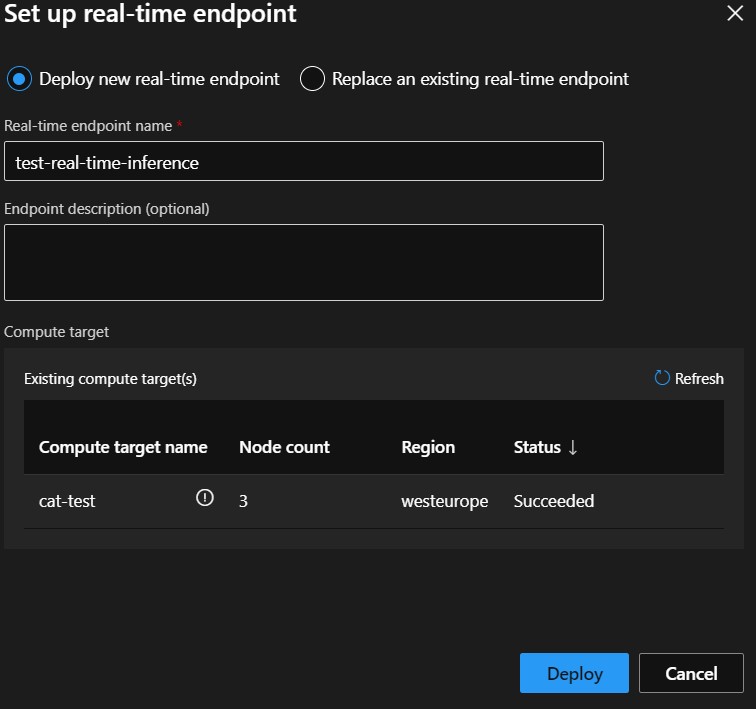

- which compute target did you attempt to deploy to, e.g. ACI or AKS?

- what are your model size and deployment configuration?

- what were the error messages?

Note that local debugging will usually help you identify one of the issues mentioned above.
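As a rough illustration of what a local check might look like, here is a minimal sketch that calls an entry script's `init()` and `run()` functions outside the container and reports which stage fails first. It assumes the usual Azure ML entry-script convention of an `init()` that loads the model once and a `run(raw_data)` that handles each request. The `init` and `run` bodies below are hypothetical stand-ins, not the real score.py, and `smoke_test` is an illustrative helper, not part of any Azure SDK.

```python
import json
import traceback

# Hypothetical stand-in for a real score.py. An actual entry script would
# load the registered model from disk inside init().
model = None


def init():
    """Called once when the scoring container starts."""
    global model
    # Assumption: a trivial "model" that returns the length of each input string.
    model = lambda rows: [len(str(row)) for row in rows]


def run(raw_data):
    """Called for every scoring request with the raw JSON request body."""
    data = json.loads(raw_data)["data"]
    return {"result": model(data)}


def smoke_test(init_fn, run_fn, payload):
    """Call init() and then run() locally and report the first stage that fails."""
    try:
        init_fn()
    except Exception:
        return {"stage": "init", "ok": False, "error": traceback.format_exc()}
    try:
        output = run_fn(json.dumps(payload))
        # Assumption: the response has to be JSON-serializable.
        json.dumps(output)
    except Exception:
        return {"stage": "run", "ok": False, "error": traceback.format_exc()}
    return {"stage": "run", "ok": True, "output": output}


if __name__ == "__main__":
    print(smoke_test(init, run, {"data": ["some text", "more"]}))
```

A failure reported at the `init` stage would point toward model loading, while a failure at the `run` stage would point toward the scoring code or the request format.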

-

Mateusz Orzymkowski 96 Reputation points

2020-09-16T12:09:10.41+00:00 Hi, thank you for the quick response. Here are the answers to your questions:

There is no error message.

The model size is 95.8 MB.

If you need more information to resolve the problem, I will be glad to provide it.

-

Chifeng Cai 81 Reputation points Microsoft Employee

2020-09-17T03:28:10.637+00:00 Thanks for reporting this issue. We are working on a fix.

-

Mateusz Orzymkowski 96 Reputation points

2020-09-17T12:10:47.16+00:00 Thank you for your response. I'm waiting for news.